Judging from #darkhold conversations, other related sources and mentoring several people about it, this is a subject that has confused many. What’s interlacing, what’s progressive, what’s the difference between film and video, how does telecining work, what’s the difference between hard and soft telecine, what’s BFF/TFF/RFF, what do those numbers in DVD2AVI/DGIndex mean, where do those CCNNC letters come from and oh god I’m dizzy…

Contrary to popular belief this actually isn’t rocket science, it’s just insanely obfuscated, full of cryptic acronyms and generally confusing. Topics covered in this post will be:

- Interlaced vs. progressive and the difference between film and video

- Telecining and inverse telecining (IVTC)

- Pulldown flags, progressive vs. interlaced with MPEG2 and PAFF/MBAFF with H.264

- Various other related shit

You can stop reading now and go do something productive instead.

“Real” interlacing

Start at the beginning: interlacing. “Normal” film or video (video as you intuitively would see it) is just a sequence of pictures, also known as frames (this is called progressive). It’s also encoded as such; one picture at a time. Real interlaced video is however a quite different creature; it doesn’t have frames at all. Instead it has fields; each field contains half of the vertical resolution (but both fields have the full horizontal resolution). But it isn’t as easy as top and bottom half of the picture, that wouldn’t be evil enough. Instead each field can be thought of as containing every other horizontal line of a picture (this isn’t quite how it works, but we’ll get to that).

The thing is, a normal camera captures one frame, waits a moment, captures the next and so on. An interlaced camera on the other hand captures one FIELD (that is, a “frame” but with half the vertical resolution), waits a moment, captures the next field and so on. This means: if there is motion, you can NOT just pair two fields together to get the whole picture! This is important, remember it. So how do you display this without getting weird artifacts (like every other line being out of sync with its neighbors)? There are two possibilities; either you do it on an interlaced display that abuses some facts about how human vision works to hide the fact that it’s really displaying two different pictures from two different points in time at the same time, or you deinterlace it.

There are several ways to deinterlace, each with their own drawbacks. Some of the more common ones:

- Weave: Does not really deinterlace at all; takes pairs of fields and puts them together (every other line) to one frame. Guaranteed to have artifacts unless the clip is completely static. Avisynth builtin function: weave().

- Field blending: Takes consecutive pairs of fields and blends them together into one frame by averaging pixel values. Usually causes funky ghosting artifacts (since in an interlaced clip, each field is at a temporally separate point). Obviously very blurry since you effectively lose half the spatial resolution as well as half the temporal resolution (blending halves the frame- or fieldrate since every two fields are combined to one frame, so instead of 60 fields/s you get 30 frames/s). Avisynth: Decomb plugin, fielddeinterlace(blend=true).

- Bobbing/line doubling: Doubles the height of each field, effectively making each field its own frame. Also shifts the field slightly up or down so the picture won’t appear to jump up and down slightly. Quality depends on the quality of the resizing filter, but like blending it’s blurry since half the vertical resolution is lost in the upscale. Avisynth builtin function: bob().

- Motion compensation: Uses, well, motion compensation (like in MPEG video compression) in an attempt to take information from neighboring fields to turn pairs of fields into a frame. Avisynth: e.g. the TomsMoComp plugin.

Then there are hybrids, the best of which is probably motion-compensated bobbing with a very high quality resizer (see the Avisynth script MCBob).
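To get a feel for the basic methods, here is a minimal Avisynth sketch. It assumes "x.d2v" is a placeholder DGIndex project for a truly interlaced, top field first clip, and that the Decomb plugin is loaded for fielddeinterlace(); swap in your own source and field order.

```avisynth
# Sketch only: "x.d2v" is a placeholder for your own DGIndex project file
src = mpeg2source("x.d2v").assumetff()   # assumption: top field first; use assumebff() if not

# Weave: the decoder already hands us woven frames, so the untouched clip
# shows the combing you get from pairing two fields from different moments
woven = src

# Field blending (Decomb plugin): frame rate stays the same, ghosting where there's motion
blended = src.fielddeinterlace(blend=true)

# Bobbing: every field becomes a full-height frame, so the frame rate doubles
bobbed = src.bob()

# Return whichever one you want to look at
return bobbed
```

Change the last line to return woven or blended and step through a scene with motion to compare the artifacts described in the list.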

Telecining

Back when TVs were analog only and computers were the size of a flat, there was an enormous difference between cinematic film and television-broadcast video. The former was filmed with big photographic cameras on 35 mm celluloid film at 24 (not 23.976) frames per second, the latter with electronic cathode ray tube cameras at 59.94 (NTSC, the US) or 50 (PAL, Europe) interlaced fields per second. One was shown with cinematic projectors, the other with cathode ray tube televisions. It wasn’t easy to get from one to the other.

TERMINOLOGY: some literature calls all truly interlaced material VIDEO, as opposed to non-interlaced material which is called FILM. This derives from the historical reasons above; video was stuff filmed with video cameras and shown on TV, film was filmed on, well, film and shown in cinemas.

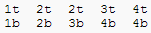

While everything above so far has applied to both PAL and NTSC, I will now move on to talk exclusively about NTSC. Since people wanted to watch movies on their TV sets, a process (called telecining) to convert 24 frames/s film to 59.94 fields/s interlaced video was invented. Each frame is first split into two fields; the first field gets all the even numbered horizontal lines (with 0 being the topmost line) and the second the odd numbered (this is called top field first; the inverse is obviously bottom field first). Now we have 48 fields per second, which still doesn’t quite cut it. We need 25% more fields per second to reach 60 fields/s, where to get them? Speeding the movie up by 25% obviously won’t go very well. Instead we take 8 fields at a time and duplicate two of them, netting us 10 fields and our desired 60 fields/second, which is then slowed down slightly to the NTSC standard rate of 60000/1001 fields/s (that is, 30000/1001 or about 29.97 frames/s). The duplicating is traditionally done as follows (the numbers are frame numbers, t stands for top field and b for bottom field, and each pair in brackets is one telecined frame):

[1t 1b] [2t 2b] [2t 3b] [3t 4b] [4t 4b]

Note that we don’t just duplicate an entire original frame; that would make the result look jerky in pans and such. Instead one field at a time is duplicated, since this is much less visible on an interlaced display.
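For the curious, this kind of pulldown can be reproduced in Avisynth itself. The sketch below uses the well-known selectevery() pulldown idiom; the synthetic blankclip() source with burned-in frame numbers is purely an assumption to keep the script self-contained, so substitute any 23.976 fps progressive clip.

```avisynth
# Synthetic 23.976 fps progressive source with the frame number burned in
blankclip(length=240, width=640, height=480, fps=24000, fps_denominator=1001)
showframenumber()

assumeframebased()
assumetff()                              # top field first
separatefields()                         # per 4 frames, 8 fields: 1t 1b 2t 2b 3t 3b 4t 4b
selectevery(8, 0,1, 2,3,2, 5,4, 7,6,7)   # 10 fields: 1t 1b 2t 2b 2t 3b 3t 4b 4t 4b
weave()                                  # 29.97 fps frames: [1t 1b] [2t 2b] [2t 3b] [3t 4b] [4t 4b]
```

Stepping through the result, the third and fourth frame of every five should show combed digits, since those frames are woven from fields of two different original frames.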

Inverse telecining involves detecting which fields belong to which original frames, matching them together (field matching) and removing the superfluous fields (decimating). If we take the example above and do the field matching from the bottom field, we’ll get a pattern like this (where C stands for “match field from Current frame” and N stands for “match field from Next frame”):

[1t 1b] [2t 2b] [2t 3b] [3t 4b] [4t 4b]

C C N N C

which gives us frames 1, 2, 3, 4, 4.

Note that we’ll end up with a duplicate frame here (#4) which we then remove (decimate), taking the frame rate from 29.97 back down to 23.976 fps. If we don’t do this we’ll get one duplicate every 5 frames and that will make pans and other continuous motion look jerky.
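As a sanity check, the whole round trip can be sketched with nothing but builtin filters. This is not how real IVTC filters work: the matching decisions are hard-coded for a cadence that never breaks, and the synthetic source is an assumption to keep the script self-contained.

```avisynth
# Build a hard telecined clip from a synthetic 23.976 fps source
blankclip(length=240, width=640, height=480, fps=24000, fps_denominator=1001)
showframenumber()
assumeframebased()
assumetff()
separatefields()
selectevery(8, 0,1, 2,3,2, 5,4, 7,6,7)   # fields 0..9: 1t 1b 2t 2b 2t 3b 3t 4b 4t 4b
weave()                                  # 29.97 fps, hard telecined

# Inverse: per cycle of 10 fields, keep one top and one bottom field of each film frame
separatefields()
selectevery(10, 0,1, 2,3, 6,5, 8,7)      # 1t 1b 2t 2b 3t 3b 4t 4b (the repeated 2t and 4b are dropped)
weave()                                  # back to 23.976 fps progressive frames
```

Picking field 6 for frame 3 and field 8 for frame 4 is the same as the two N matches above, and dropping the last field pair is the decimation step. Real sources have cadence changes at edits, which is why actual field matchers decide per frame instead of using a fixed pattern.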

Alternatively we could match from the top field and end up like this (where P stands for previous):

[1t 1b] [2t 2b] [2t 3b] [3t 4b] [4t 4b]

C C P P C

which gives us frames 1, 2, 2, 3, 4.

Same thing, really.

PAL telecining works similarly but you only need two extra fields every 48 instead of two extra every 8.

Telecining and interlacing on DVD

Back when DVD was designed, the most important thing was compatibility with existing equipment; that meant compatibility with analog NTSC televisions. Hence, a DVD player must output a 59.94 fields/s analog NTSC signal. However, the DVD itself isn’t analog and it was decided to allow progressive encoding as well and let the DVD player worry about the output signal.

For this reason, every frame on a DVD (or really, in any MPEG2 stream) can be encoded in two ways: interlaced or progressive. If a 24 fps movie is encoded as progressive, there are some flags (the repeat first field flag, or RFF) set in the stream that tell the player that certain frames should be split into fields and one of those fields be repeated during playback; effectively letting the player do telecining on the fly. If you’re familiar with DVD2AVI or DGIndex, it’ll tell you the coding type under the “frame type” heading when previewing or saving a project file; it’ll say either “interlaced” or “progressive”. However (and this is quite possibly the most important point in this entire wall of text), the coding type does not tell you what type of content you are dealing with!

In an ideal world, cinematic movies (and anime) should always be encoded as progressive with the proper RFF flags set (known as “soft telecining”), since this lets users with progressive displays view the source without deinterlacing (it also makes ripping easier, hur hur hur). If such flags are present DVD2AVI/DGIndex will say FILM under the video type header; if they are not, it’ll say NTSC (or PAL). Reality unfortunately disappoints. It’s very common to see “hard telecined” material, i.e. it was telecined in the studio and the entire DVD (not just the frames with repeated fields) is coded as interlaced. This is suboptimal not only for people with progressive displays but also from an encoding standpoint (problems include worse chroma subsampling and more overhead from the interlaced coding). DGIndex will report this as interlaced, but that does not mean you should use a deinterlacing filter on it. Instead you should use an inverse telecining filter combination like TIVTC.
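A minimal sketch of what such a filter combination looks like in a script, assuming "x.d2v" is a placeholder for your own DGIndex project and that the DGDecode and TIVTC plugins are loaded:

```avisynth
# Sketch only: "x.d2v" is a placeholder for a hard telecined source
mpeg2source("x.d2v")
tfm()         # field matching; the order and field parameters set field order and which field to match from
tdecimate()   # with default settings, drops the most similar frame in every cycle of 5
```

The defaults are only a starting point; check the TIVTC documentation before relying on any particular parameter, and always step through the result.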

Other more or less common fuckups on the DVD studio’s part include coding interlaced material as progressive (looks horrible since there’ll be a lot of extremely sharp edges where there’s any motion), taking interlaced material, deinterlacing it with a terrible blending deinterlacer and coding the now eyecancer-inducing progressive result as progressive, or a particular personal favorite of mine: taking interlaced material, deinterlacing it with an absolutely terrible deinterlacer that discards every other field and interpolates the other, and coding the “progressive” result as interlaced on the DVD (the best solution for this was to discard the broken fields and re-interpolate them from the untouched ones with NNEDI).

H.264 is a fair bit more sophisticated than MPEG2, and allows not only encoding some frames as progressive and some as interlaced (in H.264 terms known as PAFF, Picture-adaptive frame-field coding), but also allows coding parts of frames as interlaced and others as progressive (known as MBAFF, Macroblock-adaptive frame-field coding).

I reiterate: THE ONLY WAY TO DETERMINE WHAT YOU’RE DEALING WITH IS TO ACTUALLY LOOK AT IT.

How to tell interlacing from interlacing

If DGIndex/DVD2AVI reports 100% progressive film or NTSC you’re most likely dealing with one of the good ones; you just need to tell the indexing program to ignore RFF flags and blammo you have your progressive stream, no inverse telecining or decimating necessary. The setting is called “Force FILM”. This is usually the only thing you need to check for; for everything other than 100% film the process is mostly the same.

If it reports 100% interlaced NTSC, you’re either dealing with actually interlaced material or a “hard telecine”. They’re easy to tell apart; aside from the fact that interlaced material will look, well, interlaced on every single frame where there’s motion (telecined material will look interlaced one or two frames out of five due to the repeated fields), there’s another reliable way of telling. Use the following Avisynth script:

mpeg2source("x.d2v")

separatefields()

selecteven()

(separatefields() makes each field into its own frame, and selecteven() discards odd numbered frames)

Load it in VirtualDub or something, find a pan or something with movement every frame and step through it. If one field in every five is a duplicate of the one before it (four new fields, then a repeat), you have something telecined. If you don’t see any duplicates, you’re dealing with something interlaced (or 29.97fps progressive content coded as interlaced).
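If you want to check both fields at once instead of only the even ones, a small variation on the script stacks them on top of each other. As before, "x.d2v" is just a placeholder for your own project file.

```avisynth
# Sketch only: "x.d2v" is a placeholder for your own DGIndex project file
mpeg2source("x.d2v")
separatefields()
# First field of every frame on top, second field underneath
stackvertical(selecteven(), selectodd())
```

With hard telecined material each half should show its own repeated field once per five; with truly interlaced material neither half repeats.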

In general the first thing you should do when trying to find out the nature of something is separatefields() and some theorycrafting on what it could be and what that could look like.

One thing that makes all of this more interesting is the industry’s habit of mixing different types of material. You can have:

- 23.976 fps progressive material coded as progressive with RFF’s

- 23.976 fps progressive material hard telecined and coded as interlaced

- 29.97 fps progressive material coded as progressive without RFF’s

- 29.97 fps interlaced/progressive hybrid material coded as interlaced

- 59.94 fields/s pure interlaced material coded as interlaced

- etcetera

all on the same DVD.

A particularly nasty (and unfortunately common) combination is hard telecined progressive material mixed with 59.94fps interlaced studio credits scrolling over a 23.976fps progressive but hard telecined background, with the entire thing being coded as interlaced. This is really hard to fix properly and usually involves either using a creditless version or hitting the entire section with a deinterlacer like NNEDI and hoping it doesn’t fuck up the background too much. You’ll just have to deal with the jerkiness caused by the 23.976fps background being displayed at 29.97 fps with duplicate frames.

I don’t really know how to finish this theoretical rant so have some references

- bond talks about PAFF/MBAFF on doom9

- LurkerTech guides to field-based video

- Lots of pictures of various interlacing artifacts on 100fps.com (PAL stuff only, also outdated in some ways)

- The TIVTC manual has a very helpful “theory of operation” section about telecining and different matching possibilities.

- Deinterlacing filter examples by Michael Niedermayer (FFmpeg maintainer)

Comments (19)

I see nothing new here :o

its still nice to have this much info on one page instead of spread all over doom9, avisynth wiki, etc.

its appreciated.

@edogawaconan: that’s because there isn’t anything new, I just tried to clarify it for people who didn’t get it. :V

Yup, seven :)

Thanks TheFluff.

I can now say with confidence that when the time comes for me to have to use YATTA, I’ll be able to easily learn how to use it :p

TheFluff is alive, eh. Good news indeed.

Thank you very much, very helpful.

thanks dude. REALLY appreciated.

Are there any tools to read a dvd containing a mix of content as VFR?

Today I was encoding a DVD. The content consists of a bunch of 24fps progressive followed by maybe 20% 60fps interlaced.

As far as I can tell the DVD’s original encoders have dealt with this perfectly – it’s encoded like that, a 24fps progressive section with the correct flags, and then a 30fps interlaced section. But I’ve resorted to encoding as CFR and manually creating a timecodes file with which to make an MKV, because I can’t find anything that will load it as VFR.

What do you mean load it as VFR?

What you describe (marking out 30fps/24fps sections manually and writing or automatically generating a timecodes file) is pretty much standard practice; as far as I know there are no (reliable) tools that can do it automatically for you.

Well, ideally I’d like to be able to process throughout with tools that understand VFR, but I realise that’s a more general problem, and VFRaC works well enough for most purposes. Given that, I was hoping for something that would generate the timecodes file automatically from the dvd/mpeg source; since the player is able to notice the transition (and output a message about it, in the case of mplayer), I would (perhaps naively) have expected this to be easy.

OMG Thank you for the exhaustive collection of information all in one place. It’s much appreciated and answers a number of questions I didn’t even know I had. I had not gotten as far as going from one site to another site with one question to another site where I’d find half the answer and three more questions. (e.g. software docs, doom9, mplayerhq, etc etc.)

Have a quick question regards field matching and its pattern, if youre matching the bottom field, you said you get this pattern ccnnc, and matching the top field, you said you get this pattern ccppc…right?

so this means if i use the pattern guidance of yatta, should i use the appropriate video’s type of field with respect to its pattern.

eg.

Field Order: 1/Top Field First = ccppc

Field Order: 0/Bottom Field First = ccnnc

Sorry if i ask this in here, Im just making sure I understand this part of field matching since nmap didnt specify in his yatta manual if ccnnc pattern should be use in both field type: top and bottom. I appreciate if you could clarify it because ive been using ccnnc pattern for both type of fields.

*editted this part*

examples video with…

1.Field Order: 1/Top Field First = ccppc

2.Field Order: 0/Bottom Field First = ccnnc

forgot to ask this, if youre working on yatta, what ivtc plugins do you usually use, the old telecide and decomb or the newly tivtc (tfm and tdecimate). also, on vfr videos, what usually use those on those to get the necessary metrics on yatta with.. decomb?

@brooke,

Do not use tivtc’s tfm on yatta because it doesnt produce much good results when doing auto pattern guidance for you on yatta since tfm uses only c and p when field matching. (i know you could manually set the p to n by using the switch on the yatta but thats fckn hassle, just use decomb (telecide) which saves you from that hassle.

Go try both, telecide and tfm, and you would see bunch of P instead of n on tvitc in the pattern line, while decomb has bunch of N and some P on pattern line.

as for decimating, either you use decomb’s decimate or tivtc’s tdecimate is fine.

@kailAN

you’re p dumb

just for your information tivtc is vastly superior to decomb in pretty much every way, and as an additional bonus it’s not made by neuron2.

to solve your “problem” with pattern guidance, in ymc, go to the tfm config and set the “match” to the opposite of order, i.e. if order is 1 set it to B and vice versa. boom, instant ccnnc instead of the default ccppc.

Well, forgive my p ignorance since i didnt know thats how you solve that problem. it has never mentioned or asked on yatta forum, but good thing you mentioned it and id start using tivtc on yatta once again. :D

Thanks a lot ^^

THANK YOU for this. I’m trying to learn how to do decent encoding and avisynth scripting, and this wall of text really saved me a shitload of time.